Sneaky React Memory Leaks: How the React compiler won't save you

July 7, 2024

Some weeks ago, I wrote an article about how closures can lead to memory leaks in React when combined with hooks like useCallback or useEffect. The article got some attention in a couple of awesome newsletters such as TLDR WebDev, Bytes (https://bytes.dev/), and This Week In React, as well as on X. (Thanks for the shout-outs, by the way! 🙏)

Naturally, a number of people wondered how the leak I demonstrated would be handled by the new React compiler. This was the response from the React team:

the compiler will cache these values so they won't keep getting re-allocated and memory won't grow infinitely

— Sathya Gunasekaran (@_gsathya) May 29, 2024

This sounds promising, right? But, as always, the devil is in the details. Let's dive into the topic and see how the React compiler is handling the code from the previous article.

TLDR for the busy folks: The React compiler will cache values that don't depend on anything else, so they won't keep getting re-allocated. This fixes the specific leak from my previous example. However, it won't save you from leaks where a memoized closure keeps values alive that do depend on state or props. You still need to be aware of the issue and write your code accordingly.

Recap: Closures and memory leaks

In the previous article, we discussed how closures can lead to memory leaks in React. The gist:

- Closures capture variables from their outer scope. In React components, this often means capturing state, props or memoized values.

- All closures in the component share the same dictionary-style object (context object) in which the captured variables are stored. (A single object per component, not per inner closure.)

- The context object is created once when the component function is called and is kept alive until the last closure can be garbage collected.

- Every variable captured by any sibling closure is added to this object, even if that closure is never used.

- If we cache/memoize any one of the closures, it will keep the entire context object alive, even if the other closures are not used anymore.
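To make the points above concrete, here is a minimal sketch in plain TypeScript without React. The names createHandlers, logLength, handleClick and memoized are made up for illustration. The retention itself can't be observed from JavaScript, so the comments describe what a heap snapshot in a V8-based browser would be expected to show, based on the behavior listed above.

```typescript
// Stand-in for a component function; every call creates a fresh scope.
function createHandlers() {
  const bigData = new Uint8Array(1024 * 1024); // 1MB, used by logLength only
  const label = "clicked"; // small value, used by handleClick only

  // Captures bigData, so bigData is stored in the shared context object.
  const logLength = (): number => bigData.length;

  // Captures only label, but it is created in the same scope as logLength,
  // so it points at the same context object.
  const handleClick = (): string => label;

  return { logLength, handleClick };
}

// Stand-in for a memoization cache that outlives a single render.
const memoized: Array<() => string> = [];

const { handleClick } = createHandlers();
memoized.push(handleClick);

// logLength is unreachable at this point. As long as memoized holds
// handleClick, the shared context (and with it bigData) is expected to stay alive.
console.log(memoized[0]()); // "clicked"
```

The only way to confirm the retention is a heap snapshot, which is why the memory profiler keeps coming up in this article.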

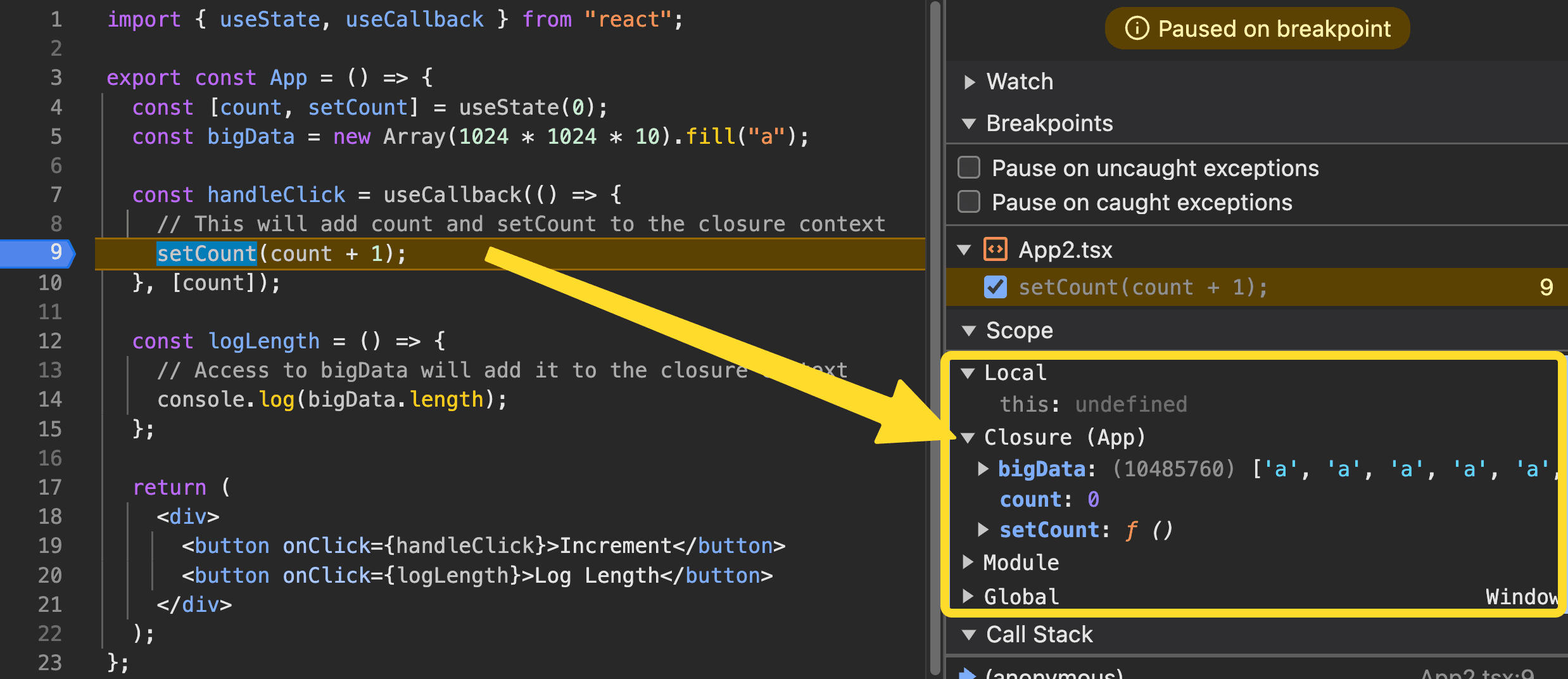

This is especially critical if any of the closures references a large object, like a big array. In the screenshot below we can clearly see that handleClick has access to the scope: "Closure (App)". This scope also holds bigData, which is only used in logLength.

Shared closure with big array accessible from sibling closure

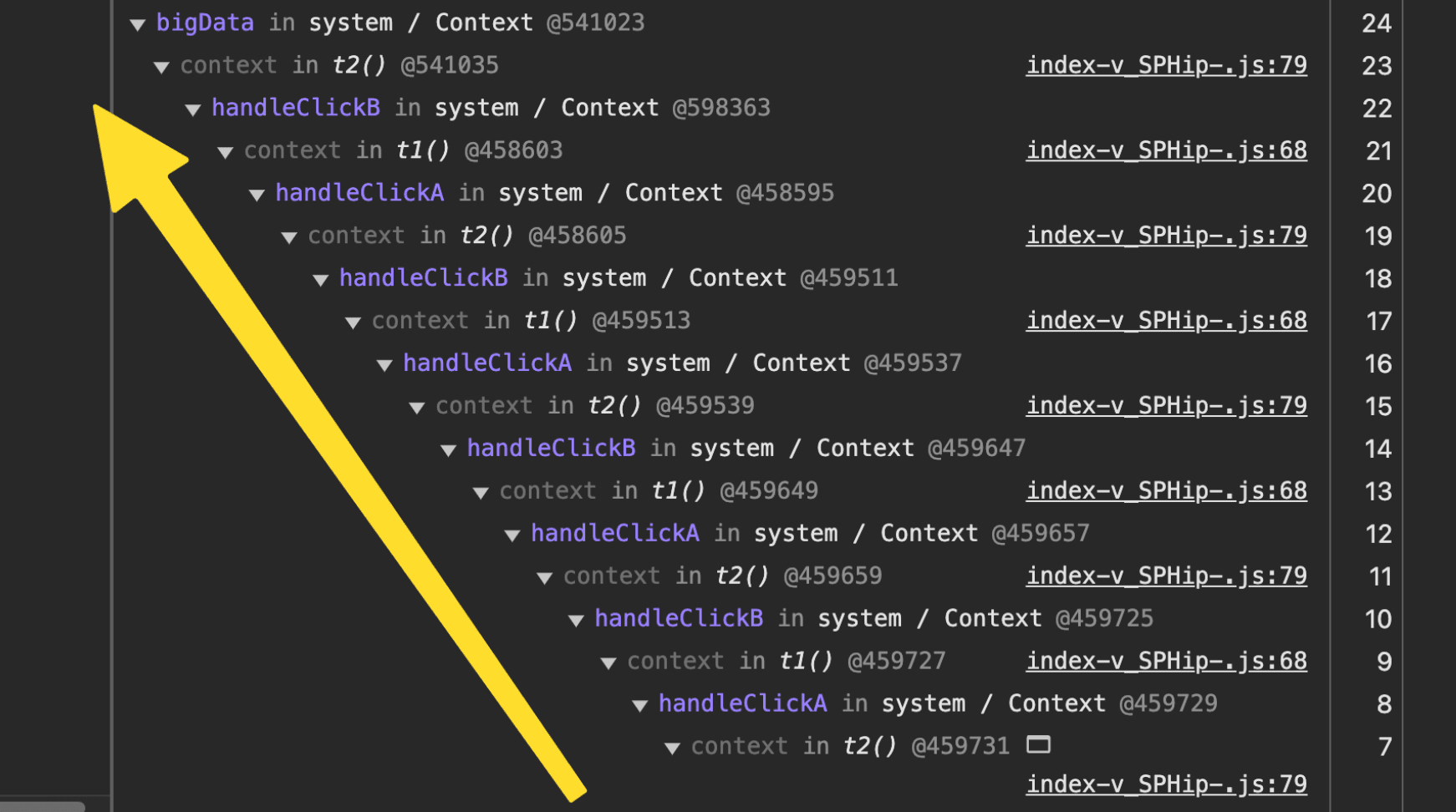

It can also have fun side effects like deeply nested useCallback reference chains. Here we can see how changing state in an unfortunate way can lead to a long chain of useCallback memoized functions:

It's handleClicks all the way down

All in all, this is a fundamental "issue" with functional-style JavaScript and not React. However, React's heavy usage of closures and memoization makes it much more likely to run into this issue. You might even say that heavily relying on a functional programming paradigm in a language that wasn't designed for it has some systemic downsides. (But, that's another topic.)
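The reference chain is easier to see in a small model. The sketch below does not use real closures or React. It represents each render's context object as a plain Scope and each memoized callback as a Handler that points back to the scope it was created in. All names (Scope, Handler, render, reachableScopes, simulate) are hypothetical, and the model assumes the scope also references both handlers, as it does when a third closure like handleClickBoth captures them.

```typescript
// Models the context object of one render.
interface Scope {
  renderNo: number;
  handleClickA: Handler | null;
  handleClickB: Handler | null;
}

// Models a memoized closure: it keeps the scope it was created in alive.
interface Handler {
  scope: Scope;
  dep: number; // the count value it was memoized on
}

// One render: reuse a handler if its dependency is unchanged,
// otherwise create a new one that belongs to the new scope.
function render(prev: Scope | null, renderNo: number, countA: number, countB: number): Scope {
  const scope: Scope = { renderNo, handleClickA: null, handleClickB: null };
  const prevA = prev === null ? null : prev.handleClickA;
  const prevB = prev === null ? null : prev.handleClickB;
  scope.handleClickA = prevA !== null && prevA.dep === countA ? prevA : { scope, dep: countA };
  scope.handleClickB = prevB !== null && prevB.dep === countB ? prevB : { scope, dep: countB };
  return scope;
}

// Counts how many scopes are still reachable from the latest render.
function reachableScopes(latest: Scope): number {
  const seen = new Set<Scope>();
  const stack: Scope[] = [latest];
  while (stack.length > 0) {
    const current = stack.pop() as Scope;
    if (seen.has(current)) continue;
    seen.add(current);
    if (current.handleClickA !== null) stack.push(current.handleClickA.scope);
    if (current.handleClickB !== null) stack.push(current.handleClickB.scope);
  }
  return seen.size;
}

// Replays a sequence of button clicks and reports the reachable scopes.
function simulate(clicks: Array<"A" | "B">): number {
  let countA = 0;
  let countB = 0;
  let scope = render(null, 0, countA, countB);
  clicks.forEach((click, index) => {
    if (click === "A") countA++;
    else countB++;
    scope = render(scope, index + 1, countA, countB);
  });
  return reachableScopes(scope);
}

console.log(simulate(["A", "A", "A", "A"])); // 2: only the first and the latest scope
console.log(simulate(["A", "B", "A", "B"])); // 5: every scope is still reachable
```

In this model, clicking the same button repeatedly keeps the number of live scopes constant, while alternating clicks keep every previous scope reachable. If each scope also holds a large object, that is the leak.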

The React compiler

You might have heard about the new React compiler. The React team is hard at work on a new build tool that will transform your code into a more optimized version. It's still in active development, but the goal is to delegate all memoization to the compiler. This way, we shouldn't have to worry about where to put our useCallback anymore, and can keep our code concise and readable.

If we believe the X reply about the closure leak, the compiler will cache "something", and this will then prevent the leak. But, what exactly is it caching? And how does it prevent the leak? Let's find out.

The leaking code

This is the code from the previous article, where alternately clicking the "Increment A" and "Increment B" buttons leads to a new allocation of the BigObject that will never get garbage collected:

    import { useState, useCallback } from "react";

    class BigObject {
      public readonly data = new Uint8Array(1024 * 1024 * 10);
    }

    export const App = () => {
      const [countA, setCountA] = useState(0);
      const [countB, setCountB] = useState(0);
      const bigData = new BigObject(); // 10MB of data

      const handleClickA = useCallback(() => {
        setCountA(countA + 1);
      }, [countA]);

      const handleClickB = useCallback(() => {
        setCountB(countB + 1);
      }, [countB]);

      // This only exists to demonstrate the problem
      const handleClickBoth = () => {
        handleClickA();
        handleClickB();
        console.log(bigData.data.length);
      };

      return (
        <div>
          <button onClick={handleClickA}>Increment A</button>
          <button onClick={handleClickB}>Increment B</button>
          <button onClick={handleClickBoth}>Increment Both</button>
          <p>
            A: {countA}, B: {countB}
          </p>
        </div>
      );
    };

Runtime behavior after using the React compiler

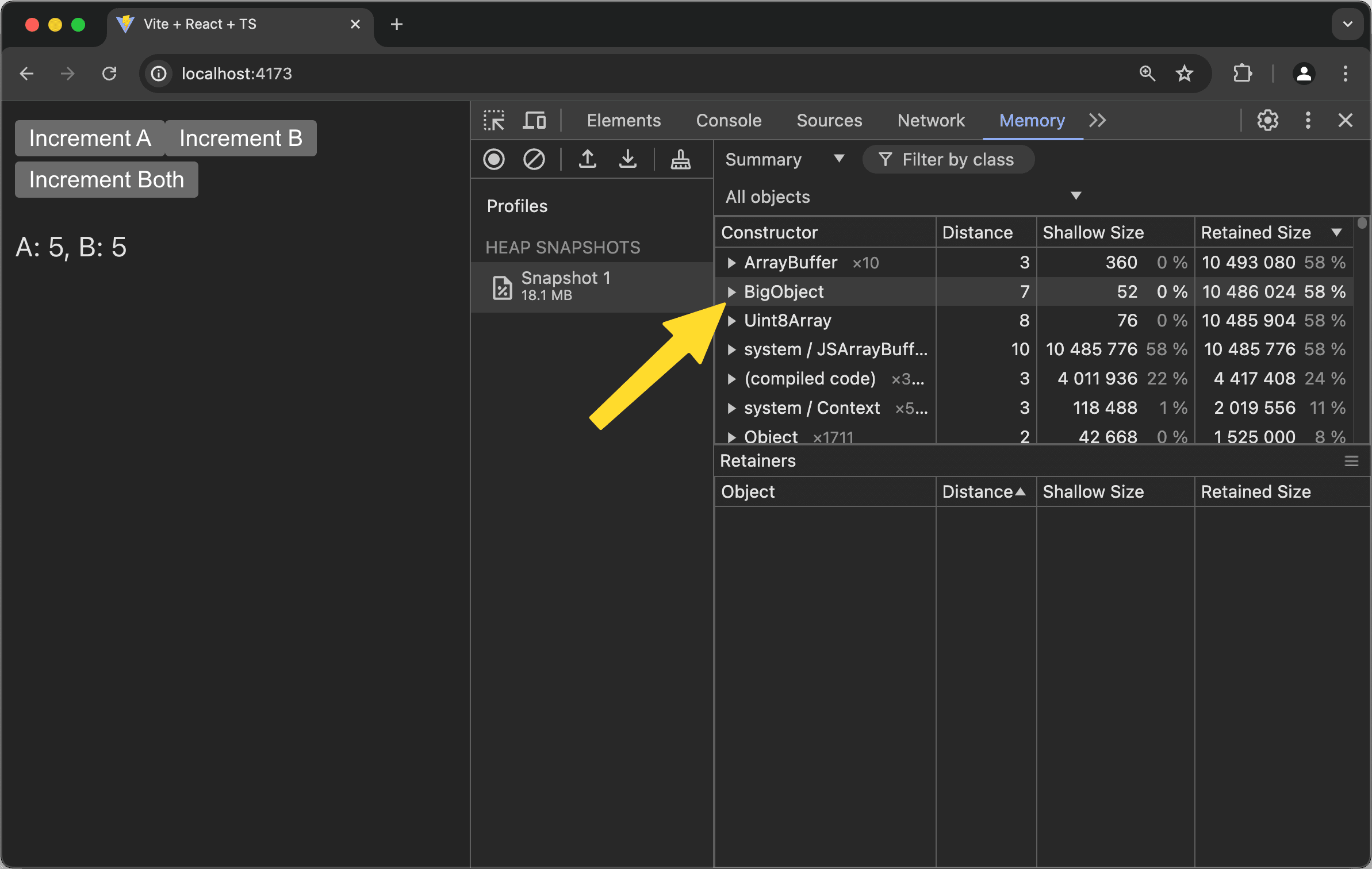

If we run this code through the React compiler and try to reproduce the memory leak, we will see that the memory usage stays constant, and we only have a single instance of BigObject allocated in the memory snapshot.

Only a single BigObject is allocated, and the memory usage stays constant.

This looks promising, right? But, let's take a closer look at the compiled code to see what the compiler is doing.

The compiled code in detail

I added the React compiler to the Vite project and ran the code through it. The compiler output is a bit verbose, but the relevant part is this:

    const App = () => {
      const $ = compilerRuntimeExports.c(22);
      const [countA, setCountA] = reactExports.useState(0);
      const [countB, setCountB] = reactExports.useState(0);
      let t0;
      if ($[0] === Symbol.for("react.memo_cache_sentinel")) {
        t0 = new BigObject();
        $[0] = t0;
      } else {
        t0 = $[0];
      }
      const bigData = t0;
      // ...
    };

You can also check out the great React compiler playground here to see the full output.

The compiler has added a new variable $ that holds the memoized values. It also inlined the check that decides whether a cached value can be reused into the if statement. This is the same comparison that previously happened behind the scenes with the dependency array.

The BigObject is now only created once and stored in the cache. This prevents the memory leak, as the BigObject is no longer created every time we render the component.

Since BigObject has no dependencies on any state or props, the compiler assumes it can be safely cached. It's equivalent to writing const bigData = useMemo(() => new BigObject(), []) in the original code.
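To see the sentinel pattern in isolation, here is a hand-written imitation of the compiled output that runs without React. It is a sketch, not the real runtime: createCache and renderApp are hypothetical names, and the cache is passed in explicitly, whereas in React it would live as long as the component instance. BigObject is shrunk and counts its own constructions.

```typescript
const SENTINEL = Symbol.for("react.memo_cache_sentinel");

// Hypothetical stand-in for the runtime's cache allocation: a fixed-size
// array whose slots all start out as the sentinel.
function createCache(size: number): unknown[] {
  return new Array<unknown>(size).fill(SENTINEL);
}

let constructions = 0;

class BigObject {
  readonly data = new Uint8Array(1024); // kept small for the sketch
  constructor() {
    constructions++;
  }
}

// Imitates the compiled component body for the dependency-free case.
function renderApp($: unknown[]): BigObject {
  let t0: BigObject;
  if ($[0] === SENTINEL) {
    t0 = new BigObject(); // first render: the slot is empty, so allocate
    $[0] = t0;
  } else {
    t0 = $[0] as BigObject; // later renders: reuse the cached instance
  }
  return t0;
}

const cache = createCache(1);
const first = renderApp(cache);
const second = renderApp(cache);
const third = renderApp(cache);

console.log(constructions, first === second && second === third); // 1 true
```

Three simulated renders construct exactly one BigObject, which matches the single instance in the memory snapshot.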

💡 This shows why it's important to write pure components in React. Each render should produce the same output given the same props and state. My example violated this rule by creating a new BigObject during each render and it relied on these new instances to demonstrate the memory leak.

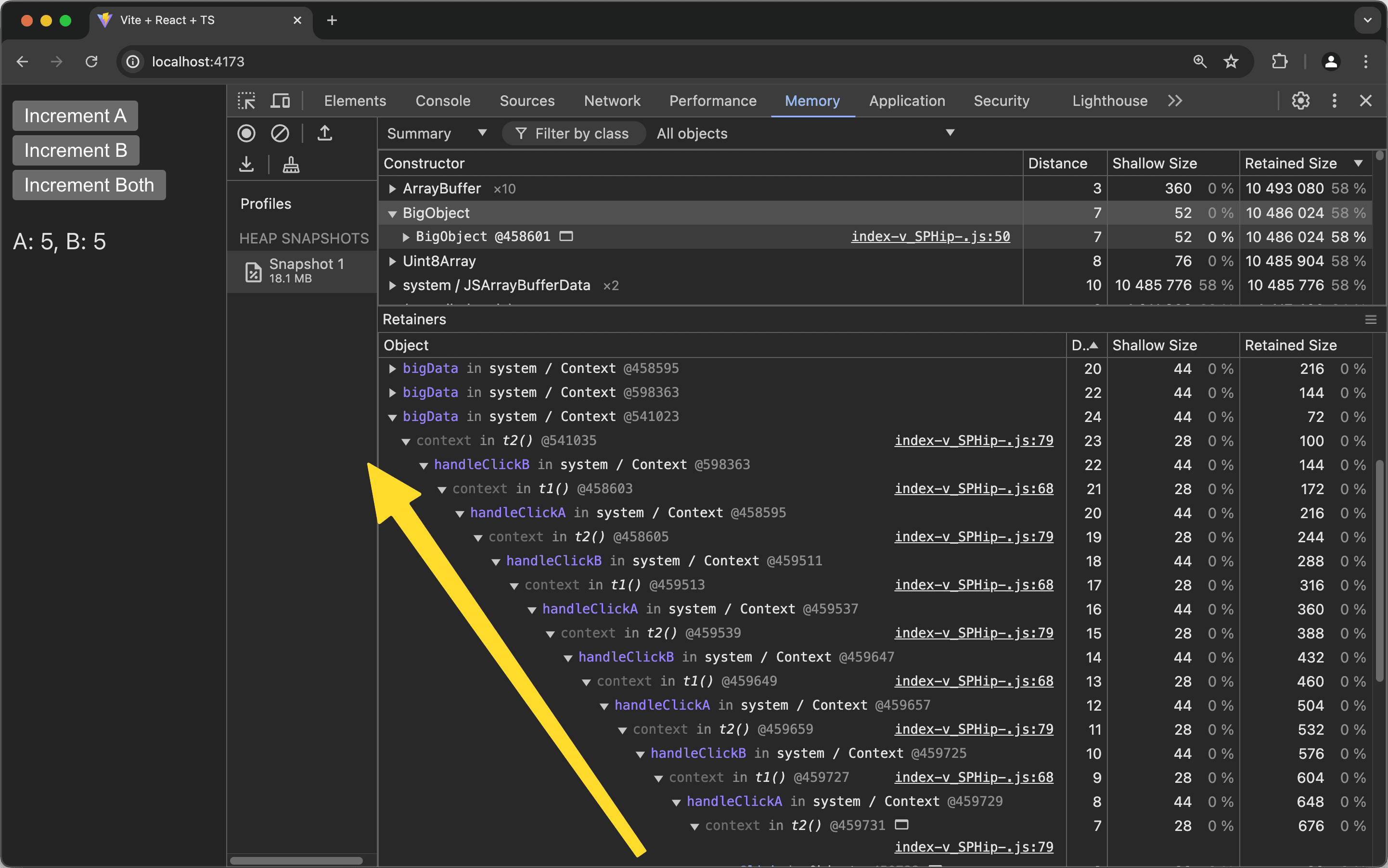

This is what Sathya meant in his reply on X. The React compiler will cache the values that don't depend on anything else, so they won't keep getting re-allocated. Unfortunately, this doesn't solve the closure issue and only shows that my example doesn't work any longer. We can easily verify this by looking at the retainer with the longest distance in the Chrome DevTools:

We still have a long alternating reference chain of various callbacks.

Changing the code to demonstrate the leak again

Now that we understand how the React compiler works, we can easily change the code to bring our lovely memory leak back:

    import { useState, useCallback } from "react";

    class BigObject {
      constructor(public state: string) {}
      public readonly data = new Uint8Array(1024 * 1024 * 10);
    }

    export const App = () => {
      const [countA, setCountA] = useState(0);
      const [countB, setCountB] = useState(0);
      const bigData = new BigObject(`${countA}/${countB}`); // 10MB of data

      // The rest of the code stays the same
      // ...
    };

Now we pass the countA and countB state to the BigObject constructor, so a new instance is created for each state change. Incidentally, this is also a little closer to the original production issue we faced that triggered the first article.

If we now check the compiled result we will see the dependency on the countA and countB state:

    const App = () => {
      const $ = compilerRuntimeExports.c(24);
      const [countA, setCountA] = reactExports.useState(0);
      const [countB, setCountB] = reactExports.useState(0);
      const t0 = `${countA}/${countB}`;
      let t1;
      if ($[0] !== t0) {
        t1 = new BigObject(t0);
        $[0] = t0;
        $[1] = t1;
      } else {
        t1 = $[1];
      }
      const bigData = t1;
      // ...
    };

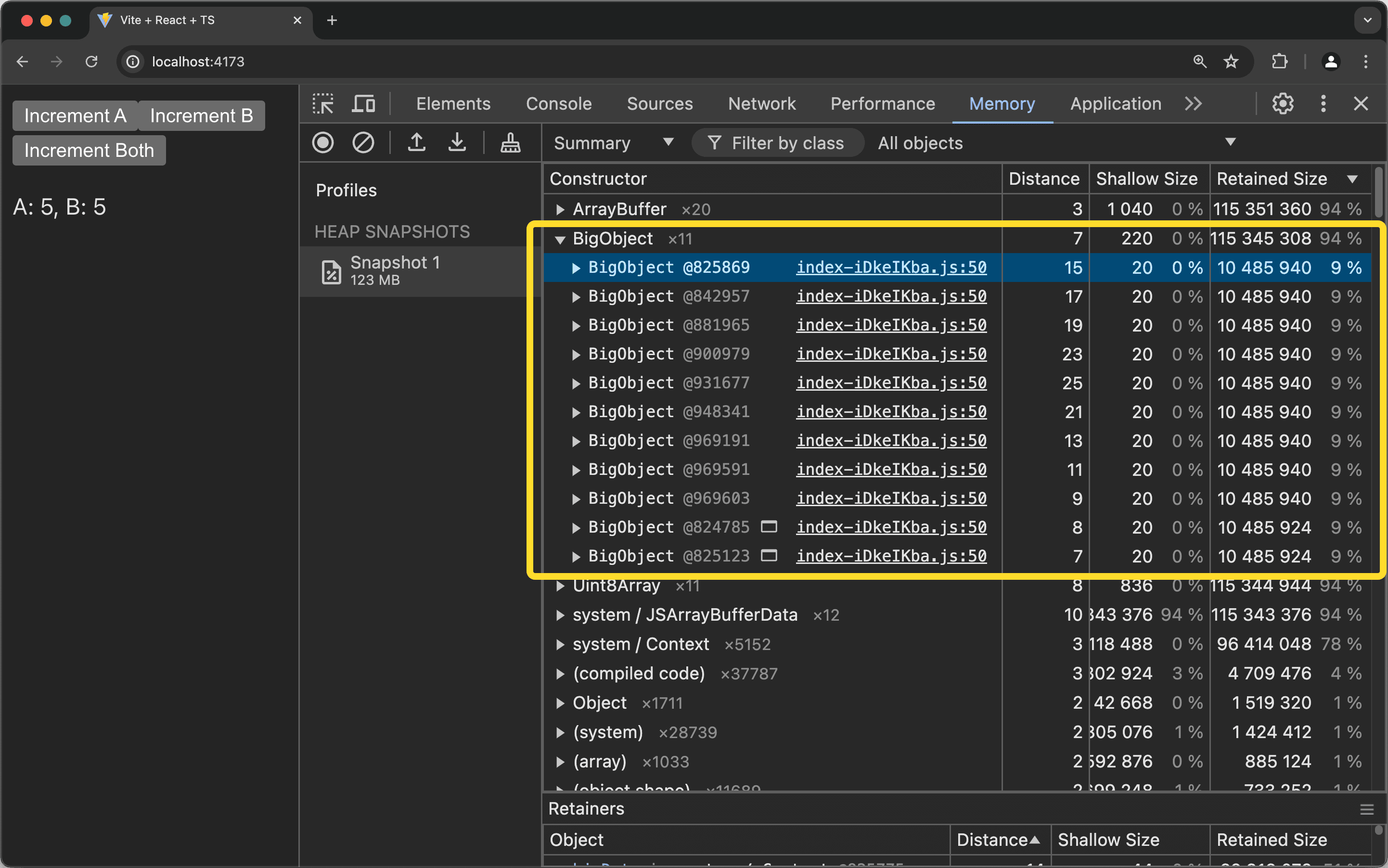

And the memory leak is back. A new BigObject is now created on every state change, and the old instances never get garbage collected. The memory usage will grow with each click on the buttons.

The memory usage grows with each click on the buttons.
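The dependency check can also be imitated outside React. The following sketch mirrors the compiled snippet above with a hypothetical renderApp function and an explicit cache array. TrackedBigObject is a shrunken stand-in for BigObject that counts how often it is constructed.

```typescript
let created = 0;

class TrackedBigObject {
  readonly data = new Uint8Array(1024); // kept small for the sketch
  readonly state: string;
  constructor(state: string) {
    this.state = state;
    created++;
  }
}

// Imitates the compiled component body: slot 0 stores the dependency,
// slot 1 stores the value computed from it.
function renderApp($: unknown[], countA: number, countB: number): TrackedBigObject {
  const t0 = `${countA}/${countB}`;
  let t1: TrackedBigObject;
  if ($[0] !== t0) {
    t1 = new TrackedBigObject(t0); // dependency changed: allocate a new instance
    $[0] = t0;
    $[1] = t1;
  } else {
    t1 = $[1] as TrackedBigObject; // dependency unchanged: reuse
  }
  return t1;
}

const slots: unknown[] = [undefined, undefined];
const r1 = renderApp(slots, 0, 0); // initial render
const r2 = renderApp(slots, 1, 0); // "Increment A"
const r3 = renderApp(slots, 1, 0); // re-render without a state change
const r4 = renderApp(slots, 1, 1); // "Increment B"

console.log(created, r2 === r3, r4.state); // 3 true "1/1"
```

Note that the cache only ever holds the latest instance. The older instances stay alive for a different reason: they are still reachable through the scopes of older memoized closures, which is the reference chain seen in the retainer view earlier.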

What if we remove all memoization?

When we faced this issue in production, we removed a lot of memoization to prevent these cross-referenced closures that you can see in the handleClickBoth function. This function references the other two click handlers, which makes it that much harder to reason about the changes that actually invalidate the memoized values.

Now, with the React compiler, we need to take extra care, since we delegate this responsibility to a tool. The code below has no memoization at all:

    import { useState } from "react";

    class BigObject {
      constructor(public state: string) {}
      public readonly data = new Uint8Array(1024 * 1024 * 10);
    }

    export const App = () => {
      const [countA, setCountA] = useState(0);
      const [countB, setCountB] = useState(0);
      const bigData = new BigObject(`${countA}/${countB}`); // 10MB of data

      const handleClickA = () => {
        setCountA(countA + 1);
      };

      const handleClickB = () => {
        setCountB(countB + 1);
      };

      // This only exists to demonstrate the problem
      const handleClickBoth = () => {
        handleClickA();
        handleClickB();
        console.log(bigData.data.length);
      };

      return (
        <div>
          <button onClick={handleClickA}>Increment A</button>
          <button onClick={handleClickB}>Increment B</button>
          <button onClick={handleClickBoth}>Increment Both</button>
          <p>
            A: {countA}, B: {countB}
          </p>
        </div>
      );
    };

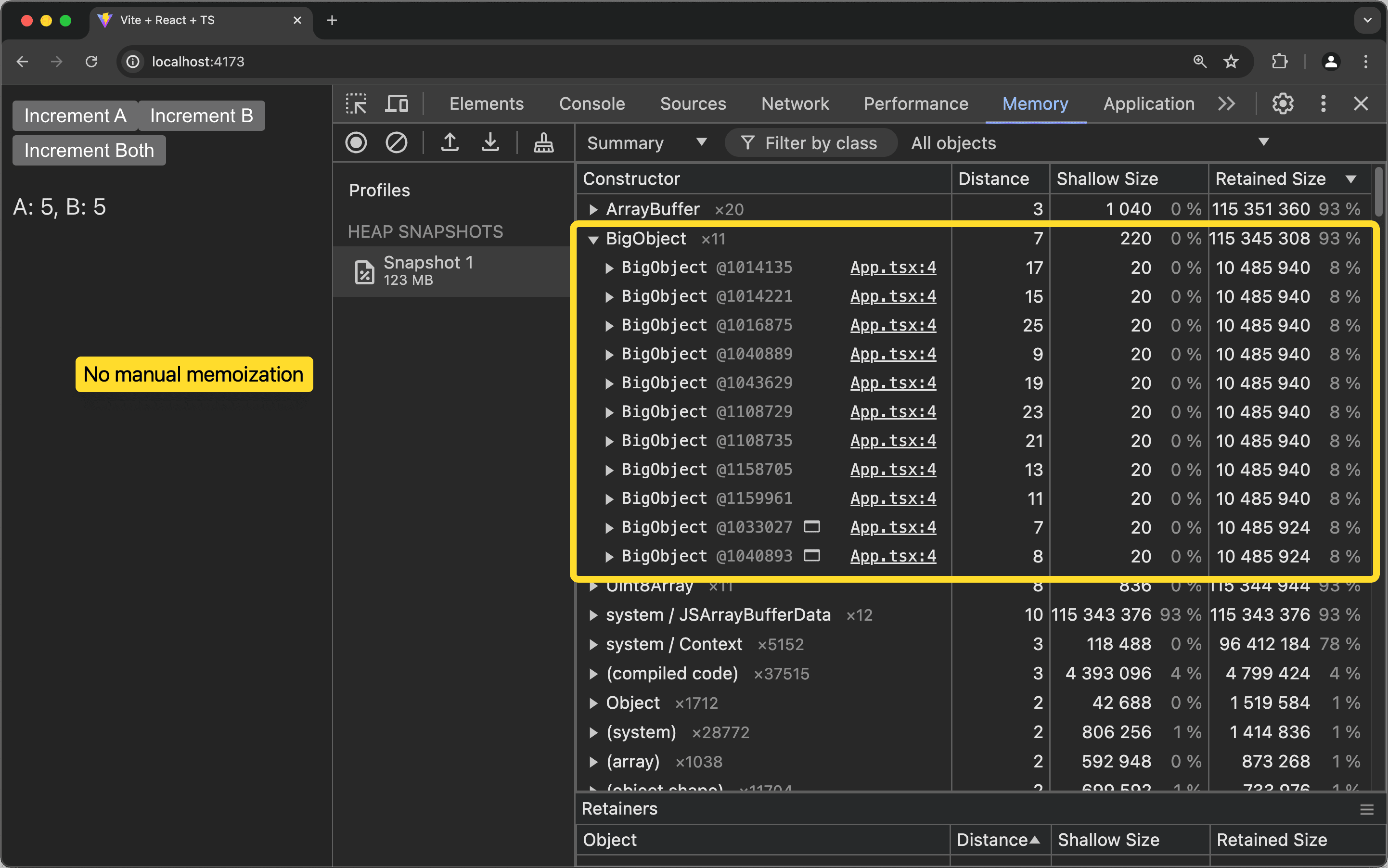

But the memory leak is added back in by the compiler's memoization. For developers who will be new to React after the compiler becomes the default, this might be very hard to realize, debug and fix.

We still have a memory leak, but now the memoization is handled by the compiler.

An experimental solution, using bind(null, x)

Inspired by some comments on the previous article, one approach to the issue might be to circumvent closures altogether. Instead, we could try to pass the required values directly to a function using bind. With bind, we don't need to rely on the context object that is shared between all closures. This approach is a bit more verbose, but it might be a good way to prevent those kinds of overreaching closures. DX is not even that bad with TypeScript inference.

    import { useState } from "react";

    class BigObject {
      constructor(public state: string) {}
      public readonly data = new Uint8Array(1024 * 1024 * 10);
    }

    // Generic bindNull function to get rid of the closure
    // TypeScript inference to improve DX
    // (readonly constraint so that `as const` tuples are accepted)
    function bindNull<U extends readonly unknown[]>(f: (args: U) => void, x: U): () => void {
      return f.bind(null, x);
    }

    export const App = () => {
      const [countA, setCountA] = useState(0);
      const [countB, setCountB] = useState(0);
      const bigData = new BigObject(`${countA}/${countB}`); // 10MB of data

      const handleClickA = bindNull(
        ([count, setCount]) => {
          setCount(count + 1);
        },
        [countA, setCountA] as const
      );

      const handleClickB = bindNull(
        ([count, setCount]) => {
          setCount(count + 1);
        },
        [countB, setCountB] as const
      );

      const handleClickBoth = bindNull(
        ([countA, setCountA, countB, setCountB]) => {
          setCountA(countA + 1);
          setCountB(countB + 1);
          console.log(bigData.data.length);
        },
        [countA, setCountA, countB, setCountB] as const
      );

      return (
        <div>
          <button onClick={handleClickA}>Increment A</button>
          <button onClick={handleClickB}>Increment B</button>
          <button onClick={handleClickBoth}>Increment Both</button>
          <p>
            A: {countA}, B: {countB}
          </p>
        </div>
      );
    };

This code does not leak and works as expected with the React compiler. I'm in no way sure that this wouldn't have some other unintended side effects, but it's an interesting approach to the problem and possible food for thought. (Disclaimer: I'm no expert on bind and its implications.)
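For anyone who wants to poke at the bind idea without React, here is a standalone sketch. It uses its own copy of the helper with a readonly constraint so that `as const` tuples are accepted. The increment function, the count variable and the setCount setter are made up for the demo. The point is that a bound function stores its arguments itself, so the handler can live at module level and has no access to the locals of any component.

```typescript
// Copy of the helper; the constraint is `readonly unknown[]` so that
// tuples created with `as const` are accepted.
function bindNull<U extends readonly unknown[]>(f: (args: U) => void, x: U): () => void {
  return f.bind(null, x);
}

// Hypothetical stand-ins for a piece of state and its setter.
let count = 0;
const setCount = (next: number): void => {
  count = next;
};

// Defined at module level: everything it needs must arrive through its argument.
function increment([current, set]: readonly [number, (next: number) => void]): void {
  set(current + 1);
}

// The bound function stores the tuple [0, setCount] as a bound argument.
const handleClick = bindNull(increment, [count, setCount] as const);

handleClick();
console.log(count); // 1

// Calling it again still uses the bound value 0, so count stays at 1.
handleClick();
console.log(count); // 1
```

Just like a closure over stale state, the bound value is fixed at bind time, so the handler has to be re-bound on each render to see fresh state.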

Conclusion

The React compiler is a great tool that will probably make 98% of the codebases out there more performant and easier to read. For the remaining 2%, it might not be enough and even make it harder to reason about the code.

One thing is sure: the compiler won't save you from memory leaks caused by closures. This, in my opinion, is a fundamental problem with JavaScript and how we combine it with functional programming paradigms. Closures in JavaScript (at least in V8) are just not designed for fine-grained memory optimization. This is fine if your components mount/unmount quickly or handle smaller amounts of data, but it can be a real issue if you have long-lived components with large data dependencies.

Ways forward might revolve around being explicit about the dependencies of the closures, like in the bind example above. Maybe we will see some smart wrapper that makes this even less verbose and more ergonomic. But, for now, we have to be aware of the issue and, as always, keep the following best practices in mind:

- Write small components.

- Write pure components.

- Write custom functions/hooks.

- Use the memory profiler.
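One possible reading of "write custom functions/hooks" is to create closures inside small helper functions instead of inside the component body. A closure can only capture variables from the scopes it is defined in, so a handler created in a helper cannot reach the component's locals. The sketch below is plain TypeScript with hypothetical names (makeIncrement, renderLikeComponent) and is meant as an illustration of the scoping rule, not as a verified fix for the leak.

```typescript
// Defined outside the "component": the returned closure's scope contains
// only count and setCount.
function makeIncrement(count: number, setCount: (next: number) => void): () => void {
  return () => setCount(count + 1);
}

// Stand-in for a component body with a large local value.
function renderLikeComponent(count: number, setCount: (next: number) => void) {
  const bigData = new Uint8Array(1024 * 1024); // local to this call
  const handleClick = makeIncrement(count, setCount); // cannot capture bigData
  return { handleClick, size: bigData.length };
}

let value = 0;
const { handleClick: onClick, size } = renderLikeComponent(value, (next) => {
  value = next;
});

onClick();
console.log(value, size); // 1 1048576
```

Whether this helps in a given component is something only the memory profiler can confirm.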

I hope this article gave you some insights into the React compiler and how it handles memory leaks. If you have any questions or feedback, feel free to reach out to me on X or LinkedIn, or leave a comment below. Thanks for reading! 🚀